Mounting HDFS with fuse-dfs: The Complete Process

Published: 2014-09-05 14:21:32 Author: 知识屋


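The fuse-dfs mount finally works. It took a little over two weeks of on-and-off effort, and the error in the final mount step alone cost me a week. I only learned what I had done wrong after joining an HDFS QQ group and asking there. Here is the full story.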


Prerequisites:

CentOS 6.3, Hadoop 1.2.0, JDK 1.6.0_45, fuse 2.8.4, ant 1.9.1

1. Install fuse

yum install fuse fuse-libs fuse-devel

2. Install ant

Download it from the official website and extract it.

3. System configuration

vi /etc/profile

Append the following at the end of the file:

export OS_ARCH=i386 # use amd64 on a 64-bit machine

export OS_BIT=32 # use 64 on a 64-bit machine

export JAVA_HOME=/usr/java/jdk1.6.0_45

export ANT_HOME=/usr/ant

export PATH=$JAVA_HOME/bin:$ANT_HOME/bin:$PATH

export HADOOP_HOME=/usr/hadoop

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$HADOOP_HOME/lib:$HADOOP_HOME:$CLASSPATH

export LD_LIBRARY_PATH=$JAVA_HOME/jre/lib/$OS_ARCH/server:$HADOOP_HOME/c++/Linux-$OS_ARCH-$OS_BIT/lib:/usr/local/lib:/usr/lib

Save and exit, then reload the profile: source /etc/profile
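The OS_ARCH and OS_BIT values above have to match the machine. As a rough sketch, they could be derived from the output of uname -m instead of being typed by hand. The function name detect_arch is made up for this illustration, and only the two cases mentioned above (i386/32 and amd64/64) are handled.

```shell
#!/bin/sh
# detect_arch MACHINE
# Map a machine name (as printed by `uname -m`) to the "OS_ARCH OS_BIT"
# pair used in /etc/profile. Hypothetical helper, not part of Hadoop.
detect_arch() {
    case "$1" in
        x86_64|amd64)        echo "amd64 64" ;;
        i386|i486|i586|i686) echo "i386 32" ;;
        *)                   echo "unknown"; return 1 ;;
    esac
}

# Print the two export lines for the current machine.
if pair=$(detect_arch "$(uname -m)"); then
    echo "export OS_ARCH=${pair% *}"
    echo "export OS_BIT=${pair#* }"
else
    echo "unrecognized machine type: $(uname -m)" >&2
fi
```

The printed lines can be compared with what was written to /etc/profile before moving on to the build.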

4. Compile libhdfs

cd $HADOOP_HOME

ant compile-c++-libhdfs -Dlibhdfs=1 -Dcompile.c++=1

ln -s c++/Linux-$OS_ARCH-$OS_BIT/lib build/libhdfs

ant compile-contrib -Dlibhdfs=1 -Dfusedfs=1

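(Tips: 1. If the build fails because of missing dependencies, run yum install automake autoconf m4 libtool pkgconfig fuse fuse-devel fuse-libs

2. gcc must also be installed. A successful build prints BUILD SUCCESSFUL, and seeing that line is very satisfying.)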
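Besides the success message from ant, it can help to confirm that the build outputs used in the later steps actually exist. The sketch below checks only the two paths that appear in this article: the c++/Linux-$OS_ARCH-$OS_BIT/lib directory and the build/contrib/fuse-dfs/fuse_dfs binary. The function name check_build is an assumption for illustration.

```shell
#!/bin/sh
# check_build HADOOP_HOME OS_ARCH OS_BIT
# Returns 0 only if both outputs of step 4 are present.
# Hypothetical helper; the paths are the ones used in this article.
check_build() {
    hadoop_home=$1
    arch=$2
    bits=$3
    status=0
    lib_dir="$hadoop_home/c++/Linux-$arch-$bits/lib"
    fuse_bin="$hadoop_home/build/contrib/fuse-dfs/fuse_dfs"

    if [ ! -d "$lib_dir" ]; then
        echo "missing libhdfs directory: $lib_dir" >&2
        status=1
    fi
    if [ ! -f "$fuse_bin" ]; then
        echo "missing fuse_dfs binary: $fuse_bin" >&2
        status=1
    fi
    return $status
}
```

A typical call would be check_build "$HADOOP_HOME" "$OS_ARCH" "$OS_BIT" && echo "build outputs found".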

5. Environment configuration

cd $HADOOP_HOME/build/contrib/fuse-dfs

vi fuse_dfs_wrapper.sh

Add the following at the very top of the file:

export JAVA_HOME=/usr/java/jdk1.6.0_45

export HADOOP_HOME=/usr/hadoop

export HADOOP_CONF_DIR=/usr/hadoop/conf

export OS_ARCH=i386

export OS_BIT=32

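Change the last line from "./fuse_dfs $@" to "fuse_dfs $@", so that the binary is looked up on the PATH (it is linked into /usr/local/bin in the next step) instead of in the current directory.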

6. Set permissions and create symlinks

chmod +x /usr/hadoop/build/contrib/fuse-dfs/fuse_dfs_wrapper.sh

chmod +x /usr/hadoop/build/contrib/fuse-dfs/fuse_dfs

ln -s /usr/hadoop/build/contrib/fuse-dfs/fuse_dfs_wrapper.sh /usr/local/bin

ln -s /usr/hadoop/build/contrib/fuse-dfs/fuse_dfs /usr/local/bin/
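Because the wrapper now calls fuse_dfs without a path, both names need to resolve through PATH once the symlinks are in place. A minimal sketch of that check, using the standard command -v builtin, follows. The function name check_on_path is hypothetical.

```shell
#!/bin/sh
# check_on_path NAME...
# Print where each command resolves on PATH; return non-zero if any
# of them cannot be found. Hypothetical helper for illustration.
check_on_path() {
    missing=0
    for name in "$@"; do
        if location=$(command -v "$name"); then
            echo "$name -> $location"
        else
            echo "$name: not found on PATH" >&2
            missing=1
        fi
    done
    return $missing
}
```

For this setup the call would be check_on_path fuse_dfs fuse_dfs_wrapper.sh, and both lines should point into /usr/local/bin.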

7. Mount

mkdir /mnt/dfs

cd $HADOOP_HOME/build/contrib/fuse-dfs

fuse_dfs_wrapper.sh dfs://localhost:9000 /mnt/dfs

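(This last step is the one that stumped me for a week! For the URI that follows fuse_dfs_wrapper.sh, I had been copying the value set in conf/core-site.xml, hdfs://localhost:9000, and kept getting these errors: fuse-dfs didn't recognize hdfs://localhost:9000,-2 fuse-dfs didn't recognize /mnt/dfs,-2. The URI passed to the wrapper must start with dfs://, as in the command above, not hdfs://.)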
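Since the value in conf/core-site.xml uses the hdfs:// scheme while the mount command needs dfs://, the conversion can be written down as a small function. This is only a sketch of the rule described here; the name to_fuse_uri is made up for illustration.

```shell
#!/bin/sh
# to_fuse_uri URI
# Rewrite an hdfs:// URI (as found in conf/core-site.xml) into the
# dfs:// form passed to fuse_dfs_wrapper.sh. Hypothetical helper.
to_fuse_uri() {
    case "$1" in
        hdfs://*) echo "dfs://${1#hdfs://}" ;;   # swap the scheme only
        dfs://*)  echo "$1" ;;                   # already in the right form
        *)        echo "unsupported URI: $1" >&2; return 1 ;;
    esac
}
```

With it, the mount line could be written as fuse_dfs_wrapper.sh "$(to_fuse_uri hdfs://localhost:9000)" /mnt/dfs.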

Finally, ls /mnt/dfs shows the files stored in HDFS.
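To tell an empty directory apart from a failed mount, the mount table can be checked as well. The sketch below reads /proc/mounts and looks for the mount point with a filesystem type beginning with "fuse". The exact type string reported for fuse-dfs is an assumption here, which is why only the prefix is matched, and the function name is_fuse_mounted is hypothetical.

```shell
#!/bin/sh
# is_fuse_mounted MOUNT_POINT [MOUNTS_FILE]
# Succeeds if MOUNTS_FILE (default /proc/mounts) lists MOUNT_POINT with
# a filesystem type that starts with "fuse". Hypothetical helper.
is_fuse_mounted() {
    mount_point=$1
    mounts_file=${2:-/proc/mounts}
    # /proc/mounts fields: device, mount point, fs type, options, dump, pass
    awk -v mp="$mount_point" \
        '$2 == mp && $3 ~ /^fuse/ { found = 1 } END { exit !found }' \
        "$mounts_file"
}
```

For example, is_fuse_mounted /mnt/dfs && ls /mnt/dfs lists the files only when the mount is active. The mount can be released afterwards with fusermount -u /mnt/dfs.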

(免责声明:文章内容如涉及作品内容、版权和其它问题,请及时与我们联系,我们将在第一时间删除内容,文章内容仅供参考)

相关知识

-

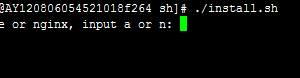

linux一键安装web环境全攻略 在linux系统中怎么一键安装web环境方法

-

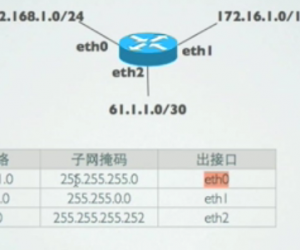

Linux网络基本网络配置方法介绍 如何配置Linux系统的网络方法

-

Linux下DNS服务器搭建详解 Linux下搭建DNS服务器和配置文件

-

对Linux进行详细的性能监控的方法 Linux 系统性能监控命令详解

-

linux系统root密码忘了怎么办 linux忘记root密码后找回密码的方法

-

Linux基本命令有哪些 Linux系统常用操作命令有哪些

-

Linux必学的网络操作命令 linux网络操作相关命令汇总

-

linux系统从入侵到提权的详细过程 linux入侵提权服务器方法技巧

-

linux系统怎么用命令切换用户登录 Linux切换用户的命令是什么

-

在linux中添加普通新用户登录 如何在Linux中添加一个新的用户

软件推荐

更多 >-

1

专为国人订制!Linux Deepin新版发布

专为国人订制!Linux Deepin新版发布2012-07-10

-

2

CentOS 6.3安装(详细图解教程)

-

3

Linux怎么查看网卡驱动?Linux下查看网卡的驱动程序

-

4

centos修改主机名命令

-

5

Ubuntu或UbuntuKyKin14.04Unity桌面风格与Gnome桌面风格的切换

-

6

FEDORA 17中设置TIGERVNC远程访问

-

7

StartOS 5.0相关介绍,新型的Linux系统!

-

8

解决vSphere Client登录linux版vCenter失败

-

9

LINUX最新提权 Exploits Linux Kernel <= 2.6.37

-

10

nginx在网站中的7层转发功能