[Linux] Concurrent multi-process execution in shell (detailed version)

Published: 2015-05-27 19:10:05, Author: 知识屋

Business background

The schedule.sh script schedules the execution of the scripts in the user-track project. An excerpt of the code follows:

```shell
#!/bin/bash
source /etc/profile
export userTrackPathCollectHome=/home/pms/bigDataEngine/analysis/script/usertrack/master/pathCollect

###############################
# Flow A
###############################
# Verify that the related-product data source for machine matching exists
lines=$(hadoop fs -ls /user/pms/recsys/algorithm/schedule/warehouse/ruleengine/artificial/product/$yesterday | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! artificial product is not exist'
    exit 1
else
    echo 'artificial product is ok!!!!!!'
fi
# Verify that the related-product data source for machine matching exists
lines=$(hadoop fs -ls /user/pms/recsys/algorithm/schedule/warehouse/mix/artificial/product/$yesterday | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! mix product is not exist'
    exit 1
else
    echo 'mix product is ok!!!!!!'
fi

###############################
# Flow B
###############################
# Build the group-buy info table; currently only the group-buy ID and product ID are extracted
sh $userTrackPathCollectHome/scripts/extract_groupon_info.sh
lines=$(hadoop fs -ls /user/hive/pms/extract_groupon_info | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! groupon info is not exist'
    exit 4
else
    echo 'groupon info is ok!!!!!'
fi
# Generate the product series data; the total file size is about 320 MB
sh $userTrackPathCollectHome/scripts/extract_product_serial.sh
lines=$(hadoop fs -ls /user/hive/pms/product_serial_id | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! product serial is not exist'
    exit 5
else
    echo 'product serial is ok!!!!!'
fi
# Preprocess into the extract_trfc_page_kpi table, used to aggregate the PV and UV counts of each page by pageId
sh $userTrackPathCollectHome/scripts/extract_trfc_page_kpi.sh $date
lines=$(hadoop fs -ls /user/hive/pms/extract_trfc_page_kpi/ds=$date | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! extract_trfc_page_kpi is not exist'
    exit 6
else
    echo 'extract_trfc_page_kpi is ok!!!!!!'
fi
# Sync term_category to Hive and map front-end categories to back-end categories
sh $userTrackPathCollectHome/scripts/extract_term_category.sh
lines=$(hadoop fs -ls /user/hive/pms/temp_term_category | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! temp_term_category is not exist'
    exit 7
else
    echo 'temp_term_category is ok!!!!!!'
fi

###############################
# Flow C
###############################
# Build the extract_track_info table
sh $userTrackPathCollectHome/scripts/extract_track_info.sh
lines=$(hadoop fs -ls /user/hive/warehouse/extract_track_info | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! extract_track_info is not exist'
    exit 1
else
    echo 'extract_track_info is ok!!!!!'
fi
...
```

As shown above, running the entire preprocessing stage takes 55 minutes.

Optimization

The script above can be divided into three flows:

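Flow A -> Flow B -> Flow C

The subtasks in Flow B do not depend on one another, so there is no need to run them in sequence. The optimization is therefore to run these independent subtasks of Flow B in parallel.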

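Linux has no dedicated command for "concurrent execution". What is called concurrent execution here simply means running these subtasks in the background, by appending & to each one. The modified script is as follows: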

```shell
#!/bin/bash
source /etc/profile
export userTrackPathCollectHome=/home/pms/bigDataEngine/analysis/script/usertrack/master/pathCollect

###############################
# Flow A
###############################
# Verify that the related-product data source for machine matching exists
lines=$(hadoop fs -ls /user/pms/recsys/algorithm/schedule/warehouse/ruleengine/artificial/product/$yesterday | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! artificial product is not exist'
    exit 1
else
    echo 'artificial product is ok!!!!!!'
fi
# Verify that the related-product data source for machine matching exists
lines=$(hadoop fs -ls /user/pms/recsys/algorithm/schedule/warehouse/mix/artificial/product/$yesterday | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! mix product is not exist'
    exit 1
else
    echo 'mix product is ok!!!!!!'
fi

###############################
# Flow B
###############################
# Concurrent process: build the group-buy info table; currently only the group-buy ID and product ID are extracted
{
    sh $userTrackPathCollectHome/scripts/extract_groupon_info.sh
    lines=$(hadoop fs -ls /user/hive/pms/extract_groupon_info | wc -l)
    if [ "$lines" -le 0 ]; then
        echo 'Error! groupon info is not exist'
        exit 4
    else
        echo 'groupon info is ok!!!!!'
    fi
}&
# Concurrent process: generate the product series data; the total file size is about 320 MB
{
    sh $userTrackPathCollectHome/scripts/extract_product_serial.sh
    lines=$(hadoop fs -ls /user/hive/pms/product_serial_id | wc -l)
    if [ "$lines" -le 0 ]; then
        echo 'Error! product serial is not exist'
        exit 5
    else
        echo 'product serial is ok!!!!!'
    fi
}&
# Concurrent process: preprocess into the extract_trfc_page_kpi table, used to aggregate the PV and UV counts of each page by pageId
{
    sh $userTrackPathCollectHome/scripts/extract_trfc_page_kpi.sh $date
    lines=$(hadoop fs -ls /user/hive/pms/extract_trfc_page_kpi/ds=$date | wc -l)
    if [ "$lines" -le 0 ]; then
        echo 'Error! extract_trfc_page_kpi is not exist'
        exit 6
    else
        echo 'extract_trfc_page_kpi is ok!!!!!!'
    fi
}&
# Concurrent process: sync term_category to Hive and map front-end categories to back-end categories
{
    sh $userTrackPathCollectHome/scripts/extract_term_category.sh
    lines=$(hadoop fs -ls /user/hive/pms/temp_term_category | wc -l)
    if [ "$lines" -le 0 ]; then
        echo 'Error! temp_term_category is not exist'
        exit 7
    else
        echo 'temp_term_category is ok!!!!!!'
    fi
}&

###############################
# Flow C
###############################
# Wait for all of the background processes above to finish
wait
echo 'end of backend jobs above!!!!!!!!!!!!!!!!!!!!!!!!!!!!'
# Build the extract_track_info table
sh $userTrackPathCollectHome/scripts/extract_track_info.sh
lines=$(hadoop fs -ls /user/hive/warehouse/extract_track_info | wc -l)
if [ "$lines" -le 0 ]; then
    echo 'Error! extract_track_info is not exist'
    exit 1
else
    echo 'extract_track_info is ok!!!!!'
fi
```

In the script above, all of the independent subtasks in Flow B are moved to the background, which gives us "concurrent execution". At the same time, the script's execution order must stay intact:

Flow A -> Flow B -> Flow C

To guarantee this, the following must be added before Flow C:

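```shell
# Wait for all of the background processes above to finish
wait
```

Its purpose is to make the script wait until every background process in Flow B has finished before Flow C runs.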
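One behavior is worth checking before relying on this pattern. In bash, `exit 4` inside a `{ ... }&` block ends only that background subshell. Also, `wait` with no arguments returns 0 once every child has finished. So a failed check in Flow B no longer stops schedule.sh the way it did in the sequential version.

The sketch below shows one way to keep those exit codes, assuming bash. It records each job's PID from `$!` and calls `wait` on each PID, which returns that job's own exit status. The `wait_all` name and the `sleep`/`exit` jobs are hypothetical stand-ins for the real Flow B steps.

```shell
#!/bin/bash
# Sketch (bash): wait for each background job by PID so that a failing
# job's exit code is not lost. Plain `wait` with no arguments returns 0.

# Hypothetical helper: wait for every PID given as an argument and return
# the exit code of the last failing job in argument order (0 if none failed).
wait_all() {
    local status=0 pid rc
    for pid in "$@"; do
        wait "$pid"          # returns this particular job's exit status
        rc=$?
        if [ "$rc" -ne 0 ]; then
            echo "Error! background job $pid exited with code $rc" >&2
            status=$rc
        fi
    done
    return "$status"
}

# Stand-ins for the Flow B blocks; the second one fails like 'exit 5'.
pids=()
{ sleep 1; exit 0; } & pids+=("$!")
{ sleep 1; exit 5; } & pids+=("$!")
{ sleep 1; exit 0; } & pids+=("$!")

wait_all "${pids[@]}"
rc=$?
echo "wait_all returned $rc"
```

In schedule.sh, a helper like this could take the place of the bare `wait` before Flow C. The script would then exit with `$rc` when it is non-zero, so that Flow C does not start after a failed Flow B step.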

Conclusion

After the optimization, the script's run time dropped from 55 minutes to 15 minutes, a significant improvement.
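The gain comes from overlapping the Flow B jobs. Run sequentially, their times add up. Run in the background, the stage takes roughly as long as its slowest job, provided the jobs are not contending for the same machine or cluster resources.

The sketch below makes this visible with bash's built-in `SECONDS` counter. `sleep` stands in for the real jobs, and the durations are made up; they have nothing to do with the 55- and 15-minute figures above.

```shell
#!/bin/bash
# Sketch: compare sequential and background execution of three stand-in
# jobs. SECONDS is a bash built-in that counts whole seconds since it was
# last assigned.

job() { sleep 1; }   # hypothetical stand-in for one Flow B step

SECONDS=0
job; job; job                   # sequential: roughly 1 + 1 + 1 = 3 s
sequential=$SECONDS

SECONDS=0
{ job; } &
{ job; } &
{ job; } &
wait                            # background: roughly max(1, 1, 1) = 1 s
parallel=$SECONDS

echo "sequential: ${sequential}s, background: ${parallel}s"
```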

相关知识

-

linux一键安装web环境全攻略 在linux系统中怎么一键安装web环境方法

-

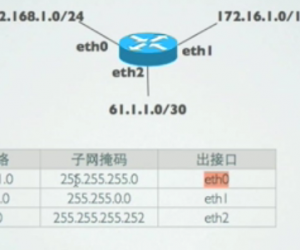

Linux网络基本网络配置方法介绍 如何配置Linux系统的网络方法

-

Linux下DNS服务器搭建详解 Linux下搭建DNS服务器和配置文件

-

对Linux进行详细的性能监控的方法 Linux 系统性能监控命令详解

-

linux系统root密码忘了怎么办 linux忘记root密码后找回密码的方法

-

Linux基本命令有哪些 Linux系统常用操作命令有哪些

-

Linux必学的网络操作命令 linux网络操作相关命令汇总

-

linux系统从入侵到提权的详细过程 linux入侵提权服务器方法技巧

-

linux系统怎么用命令切换用户登录 Linux切换用户的命令是什么

-

在linux中添加普通新用户登录 如何在Linux中添加一个新的用户

软件推荐

更多 >-

1

专为国人订制!Linux Deepin新版发布

专为国人订制!Linux Deepin新版发布2012-07-10

-

2

CentOS 6.3安装(详细图解教程)

-

3

Linux怎么查看网卡驱动?Linux下查看网卡的驱动程序

-

4

centos修改主机名命令

-

5

Ubuntu或UbuntuKyKin14.04Unity桌面风格与Gnome桌面风格的切换

-

6

FEDORA 17中设置TIGERVNC远程访问

-

7

StartOS 5.0相关介绍,新型的Linux系统!

-

8

解决vSphere Client登录linux版vCenter失败

-

9

LINUX最新提权 Exploits Linux Kernel <= 2.6.37

-

10

nginx在网站中的7层转发功能